Lecsicon

By ¡wénrán zhào!

Please experience this piece in fullscreen at this link.

Below is a prompt the artist wrote for GPT-3.5 Turbo, the large language model that currently drives ChatGPT. In response, the model creates acrostics in sentence form. Thousands of word-sentence pairs compose this piece, Lecsicon: Linguists Enthusiastically Catalog Symbols, Interpreting Carefully Occurred Nuances.

“I will give you a word. Make a sentence or phrase with a series of words whose first letters sequentially spell out my word. Your sentence doesn’t have to have a strong semantic connection with my word.

Here are some examples:

Cake – Creating amazing kitchen experiences.

Fire – Fierce inferno razed everything.

Smile – Some memories invoke lovely emotions.

Now make a sentence for /WORD/.”

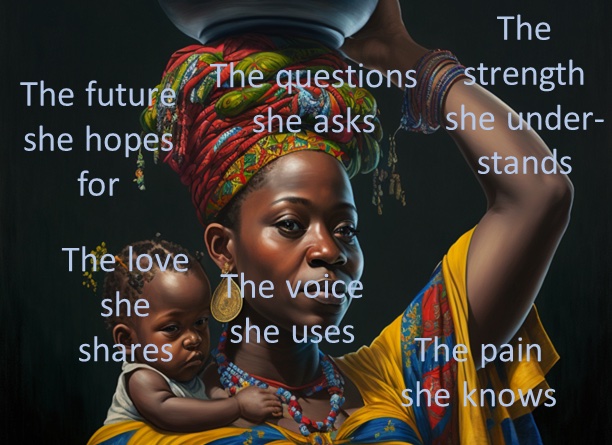

Language games like acrostics captivate the mind with the complexities hidden behind their simple mechanisms. In Lecsicon, the resulting sentences offer a broader context for the original words. Many of the sentences perform similarly to Chomsky’s “colorless green ideas sleep furiously,” but others, such as “Music: Many unexpected sounds indicate creativity” and “Date: Dinner and theater experience,” seem to flesh out how the units of meaning (letter, word, sentence, paragraph) feed into each other and how meaning bleeds through the edges: the fractal character of the English language. The model captures words based on their interrelations through a balanced computation process, like a ghost hunting through a latent vector space of words. In such a space, a word only exists in its associations with other words, and sentences emerge from the context.

Upon visiting the Lecsicon web page, users can see the word-sentence pairs typed out letter by letter by a web-based program. Starting with a random word, the program searches the Lecsicon database for the word that has the smallest Levenshtein distance from the preceding one. Adjusting the temperature slider results in stronger or weaker spelling similarity between the consecutive words displayed. Typing speed is also influenced by temperature, in the same way that molecular motion is.
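For readers curious about the mechanics, the word-to-word walk described above can be sketched in a few lines of Python. This is only an illustration, not the code behind the piece: the function names `levenshtein` and `next_word` are hypothetical, and the way temperature enters the choice (a softmax-style weighting over edit distances) is an assumption, since the piece only says that temperature strengthens or weakens the spelling similarity between consecutive words.

```python
import math
import random


def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions, substitutions to turn a into b."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute (or keep) the character
            ))
        previous = current
    return previous[-1]


def next_word(current: str, candidates: list[str], temperature: float, rng=random) -> str:
    """Pick the word that follows `current`.

    ASSUMPTION: at temperature 0 the closest word always wins; at higher
    temperatures more distant words become increasingly likely.
    """
    pool = [w for w in candidates if w != current]
    if not pool:
        raise ValueError("no candidate words other than the current one")
    distances = [levenshtein(current, w) for w in pool]
    nearest = min(distances)
    if temperature <= 0:
        return pool[distances.index(nearest)]
    # Shift by the smallest distance so the best candidate always has weight 1.
    weights = [math.exp(-(d - nearest) / temperature) for d in distances]
    return rng.choices(pool, weights=weights, k=1)[0]
```

With the slider at zero, `next_word("cake", ["cake", "fake", "smile"], 0)` would always move to "fake", one substitution away; raising the temperature lets "smile" appear now and then.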

Note:

The accuracy of GPT’s responses is not guaranteed. Sometimes one or two extra words slip into the sentence. In extreme cases, the model rambles on and loses track of the initial instructions. Out of over 27,000 fetches, 7,828 results strictly follow the rules. This piece displays only those successful entries.
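A strict rule check of this kind might look like the sketch below. It is an assumption about how such a filter could work, not the artist's actual procedure: the name `follows_acrostic` is hypothetical, and treating hyphenated or apostrophized words as single words is a choice made here for simplicity.

```python
import re


def follows_acrostic(word: str, sentence: str) -> bool:
    """Return True when the first letters of the sentence's words spell `word` exactly."""
    # ASSUMPTION: a "word" starts with a letter and may contain apostrophes or hyphens.
    tokens = re.findall(r"[A-Za-z][A-Za-z'’-]*", sentence)
    initials = "".join(token[0] for token in tokens)
    return initials.lower() == word.lower()
```

Under this check, "Cake – Creating amazing kitchen experiences" passes, while a response with one extra word, such as "Creating amazing kitchen experiences daily," is rejected.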